A new MOX report entitled “Latent Dynamics Networks (LDNets): learning the intrinsic dynamics of spatio-temporal processes” by Regazzoni, F.; Pagani, S.; Salvador, M.; Dede’, L.; Quarteroni, A. has appeared in the MOX Report Collection.

The report can be downloaded at the following link:

https://www.mate.polimi.it/biblioteca/add/qmox/37/2023.pdf

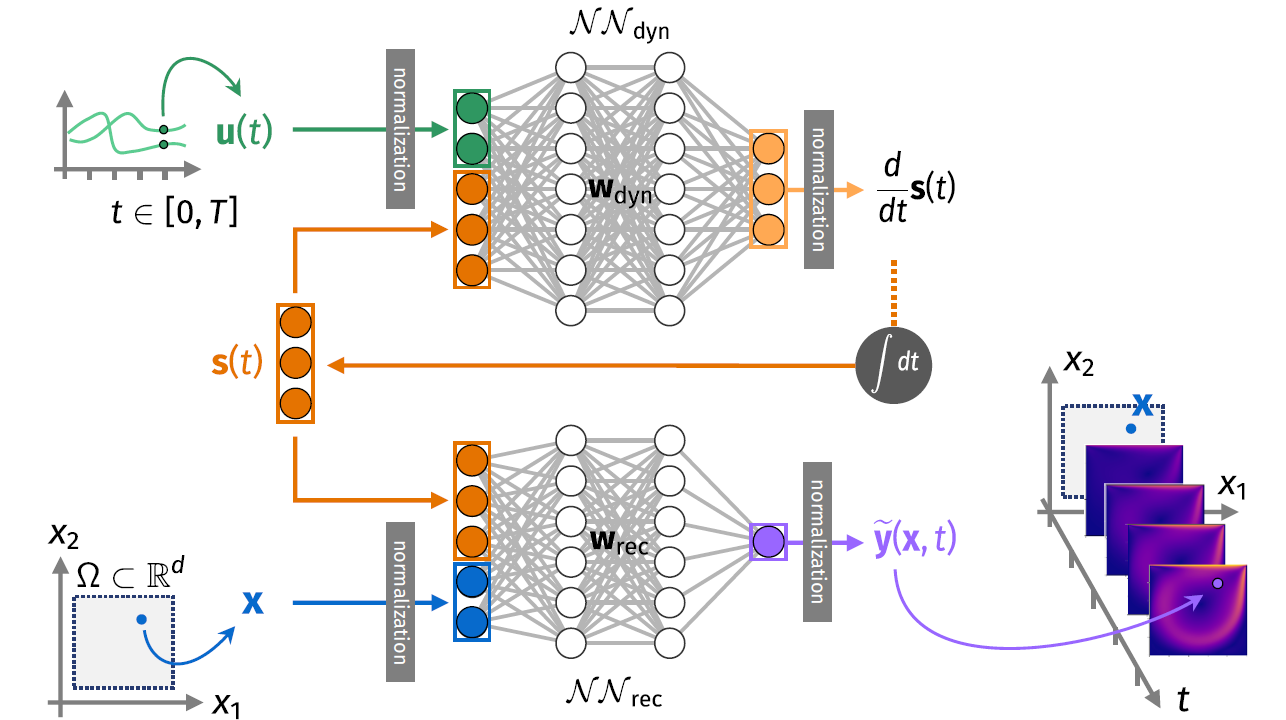

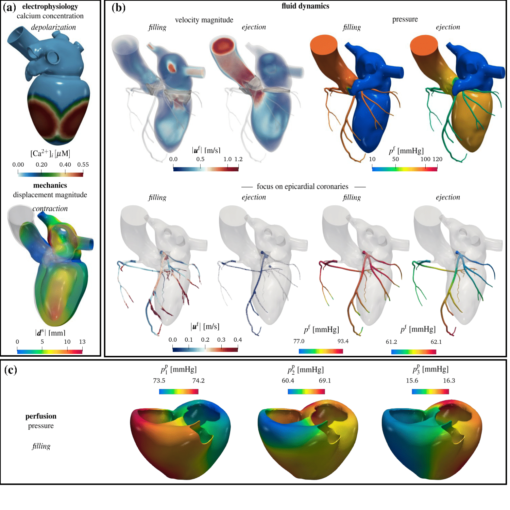

Abstract: Predicting the evolution of systems that exhibit spatio-temporal dynamics in response to external stimuli is a key enabling technology fostering scientific innovation. Traditional equations-based approaches leverage first principles to yield predictions through the numerical approximation of high-dimensional systems of differential equations, thus calling for large-scale parallel computing platforms and requiring large computational costs. Data-driven approaches, instead, enable the description of systems evolution in low-dimensional latent spaces, by leveraging dimensionality reduction and deep learning algorithms. We propose a novel architecture, named Latent Dynamics Network (LDNet), which is able to discover low-dimensional intrinsic dynamics of possibly non-Markovian dynamical systems, thus predicting the time evolution of space-dependent fields in response to external inputs. Unlike popular approaches, in which the latent representation of the solution manifold is learned by means of auto-encoders that map a high-dimensional discretization of the system state into itself, LDNets automatically discover a low-dimensional manifold while learning the latent dynamics, without ever operating in the high-dimensional space. Furthermore, LDNets are meshless algorithms that do not reconstruct the output on a predetermined grid of points, but rather at any point of the domain, thus enabling weight-sharing across query-points. These features make LDNets lightweight and easy-to-train, with excellent accuracy and generalization properties, even in time-extrapolation regimes. We validate our method on several test cases and we show that, for a challenging highly-nonlinear problem, LDNets outperform state-of-the-art methods in terms of accuracy (normalized error 5 times smaller), by employing a dramatically smaller number of trainable parameters (more than 10 times fewer).
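
To make the two ideas in the abstract concrete (a low-dimensional latent state driven by external inputs, and meshless reconstruction at arbitrary query points), here is a minimal sketch in plain Python. It is not the authors' implementation. The two-network split (`dyn` and `rec`), the forward Euler time stepping, the layer sizes, and all function names are assumptions made for illustration, and the weights are random rather than trained.

```python
import math
import random


def init_mlp(sizes, rng):
    """Random weights for a small fully connected network (untrained, illustrative)."""
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        W = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
        b = [0.0] * n_out
        layers.append((W, b))
    return layers


def mlp(layers, x):
    """Forward pass: tanh on hidden layers, linear output layer."""
    for i, (W, b) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + bi for row, bi in zip(W, b)]
        if i < len(layers) - 1:
            x = [math.tanh(v) for v in x]
    return x


def rollout(dyn, rec, inputs, query_points, n_latent, dt):
    """Evolve the latent state s(t), then evaluate the field at each query point.

    Assumed form: ds/dt = dyn(s, u) and y(x, t) = rec(s, x),
    integrated here with forward Euler (an assumption of this sketch).
    """
    s = [0.0] * n_latent  # latent state starts at zero (assumed)
    fields = []
    for u in inputs:
        ds = mlp(dyn, s + list(u))
        s = [si + dt * dsi for si, dsi in zip(s, ds)]
        # The same rec weights are reused for every query point (weight sharing).
        fields.append([mlp(rec, s + list(x))[0] for x in query_points])
    return fields


rng = random.Random(0)
N_LATENT, N_INPUT, N_SPACE = 3, 1, 1  # hypothetical sizes
dyn = init_mlp([N_LATENT + N_INPUT, 8, N_LATENT], rng)
rec = init_mlp([N_LATENT + N_SPACE, 8, 1], rng)

inputs = [[math.sin(0.1 * k)] for k in range(20)]  # toy external input signal
coarse = [[0.0], [0.5], [1.0]]                     # 3 query points
fine = [[i / 10] for i in range(11)]               # 11 query points, same domain

y_coarse = rollout(dyn, rec, inputs, coarse, N_LATENT, dt=0.1)
y_fine = rollout(dyn, rec, inputs, fine, N_LATENT, dt=0.1)
```

In this sketch the high-dimensional field is never stored as a state: only the three latent variables are advanced in time, and the output can be queried on the coarse or the fine set of points without changing any weights.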