A new MOX report entitled “Reducing the Drag of a Bluff Body by Deep Reinforcement Learning” by Ballini, E.; Chiappa, A.S.; Micheletti, S. has appeared in the MOX Report Collection.

The report can be downloaded at the following link:

https://www.mate.polimi.it/biblioteca/add/qmox/40/2023.pdf

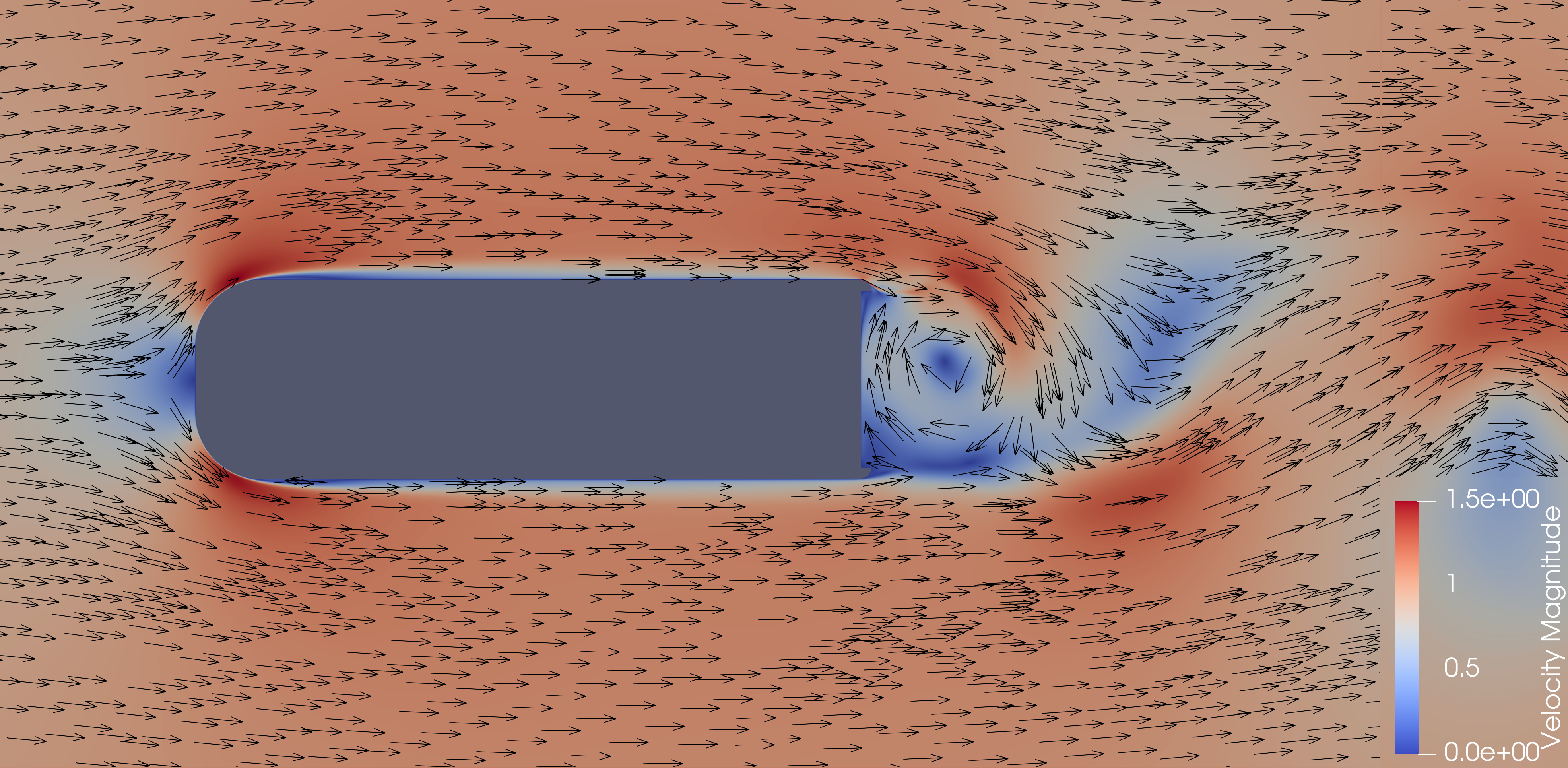

Abstract: We present a deep reinforcement learning approach to a classical problem in fluid dynamics, i.e., the reduction of the drag of a bluff body. We cast the problem as a discrete-time control with continuous action space: at each time step, an autonomous agent can set the flow rate of two jets of fluid, positioned at the back of the body. The agent, trained with Proximal Policy Optimization, learns an effective strategy to make the jets interact with the vortices of the wake, thus reducing the drag. To tackle the computational complexity of the fluid dynamics simulations, which would make the training procedure prohibitively expensive, we train the agent on a coarse discretization of the domain. We provide numerical evidence that a policy trained in this approximate environment still retains good performance when carried over to a denser mesh. Our simulations show a considerable drag reduction with a consequent saving of total power, defined as the sum of the power spent by the control system and of the power of the drag force, amounting to 40% when compared to simulations with the reference bluff body without any jet. Finally, we qualitatively investigate the control policy learnt by the neural network. We observe that it achieves the drag reduction by learning the frequency of formation of the vortices and activating the jets accordingly, thus blowing them away from the rear surface of the body.
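The abstract defines total power as the power spent by the control system plus the power of the drag force. It then measures the saving against the reference body without jets. The minimal Python sketch below shows that bookkeeping. It assumes the drag power is the drag force times the free-stream velocity, which is a common convention but not confirmed by the abstract. The function names `total_power` and `power_saving` are hypothetical. The numbers in the usage example are purely illustrative and are not taken from the report.

```python
def total_power(drag_force: float, free_stream_velocity: float, control_power: float) -> float:
    """Total power = power spent by the jets + power of the drag force.

    Assumption (not from the report): drag power is modeled as
    drag force times free-stream velocity.
    """
    drag_power = drag_force * free_stream_velocity
    return control_power + drag_power


def power_saving(controlled_total: float, reference_total: float) -> float:
    """Relative saving of total power with respect to the reference body.

    The reference body has no jets, so its total power is its drag power alone.
    """
    if reference_total <= 0.0:
        raise ValueError("reference total power must be positive")
    return 1.0 - controlled_total / reference_total


if __name__ == "__main__":
    # Illustrative values only, in arbitrary units (not results from the report).
    reference = total_power(drag_force=1.0, free_stream_velocity=1.0, control_power=0.0)
    controlled = total_power(drag_force=0.5, free_stream_velocity=1.0, control_power=0.1)
    print(f"relative saving: {power_saving(controlled, reference):.0%}")
```

With these made-up inputs, halving the drag while paying 0.1 units for the jets gives a total-power saving of about 0.4. The example shows why the control cost must be subtracted from the drag gain before a saving can be claimed.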